Senior UX Researcher · 6+ years

Research that informs what gets built, and how.

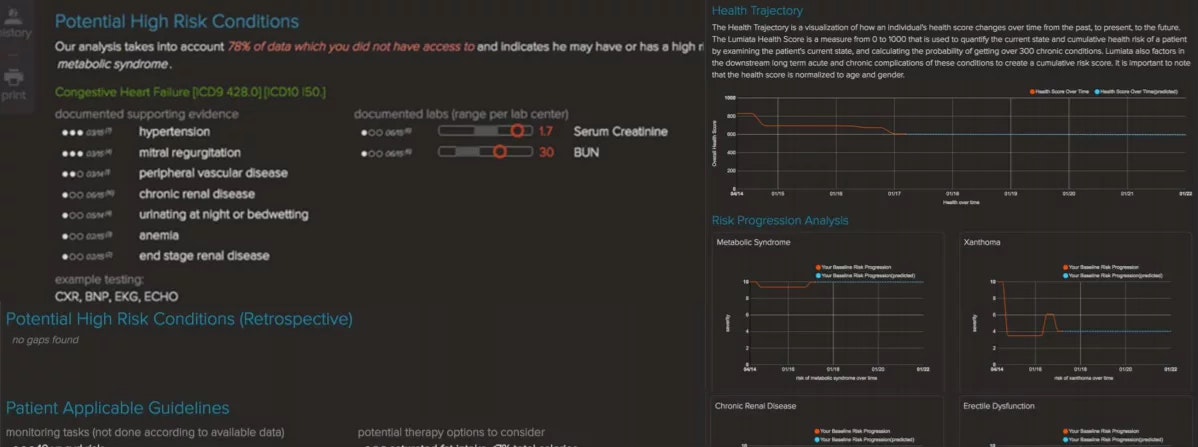

Bad bets on what users want are expensive. I find out what's actually true — what users need, where designs break down, and what's worth building next.

I've done this across AI, SaaS, crypto, and consumer products, from early discovery through rigorous design evaluation to post-launch diagnosis. The output is always the same: evidence your team can act on.
